The human eye is an incredible tool, capable of seeing a vast range of light from the brightest sun to the faintest star. Our eyes can also adjust to different levels of brightness, allowing us to see in both bright and dark environments. The span between the brightest and the darkest light levels we can perceive is called dynamic range.

The dynamic range of the human eye is much wider than that of a camera. This means that the camera cannot record as much contrast as our eyes can see. To compensate for this difference, photographers must use various techniques to capture images with a wide range of contrast.

Understanding the Human Eye Dynamic Range and What is Light?

To understand how vision and cameras work, we need to understand light. This is the stimulus of vision, and we can define it in several ways.

Light is the electromagnetic radiation the human eye can detect, in other words the visible part of the electromagnetic spectrum. Humans can detect wavelengths from roughly 380 to 700 nanometers.

According to the wave-particle duality concept, light behaves both as a particle (a photon) and as a wave. It arrives in tiny discrete packets of energy but spreads through space as a wave.

Both behaviors matter for our vision and for our cameras.
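To connect the wave and particle descriptions, the energy of a single photon can be worked out from its wavelength with the Planck relation E = hc/λ. The short Python sketch below is not part of the original article. It applies the relation to the 380 and 700 nanometer limits mentioned above, and the function name is purely illustrative.

```python
# Exact SI constants (fixed by definition since 2019).
PLANCK_J_S = 6.62607015e-34        # Planck constant, joule-seconds
LIGHT_SPEED_M_S = 299_792_458.0    # speed of light, meters per second
JOULES_PER_EV = 1.602176634e-19    # one electronvolt in joules


def photon_energy_ev(wavelength_nm: float) -> float:
    """Energy of one photon, in electronvolts, for a wavelength in nanometers."""
    wavelength_m = wavelength_nm * 1e-9
    return PLANCK_J_S * LIGHT_SPEED_M_S / wavelength_m / JOULES_PER_EV


# The two ends of the visible range quoted in the article.
for wavelength_nm in (380, 700):
    print(f"{wavelength_nm} nm -> {photon_energy_ev(wavelength_nm):.2f} eV")
```

Shorter wavelengths carry more energy per photon: violet light at 380 nm works out to about 3.26 eV, while red light at 700 nm is about 1.77 eV.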

How Do Our Eyes and Cameras Capture Light?

Both our eyes and cameras are sensitive to light. This means that they react to the signals transmitted by it. They work similarly to each other but are not built the same.

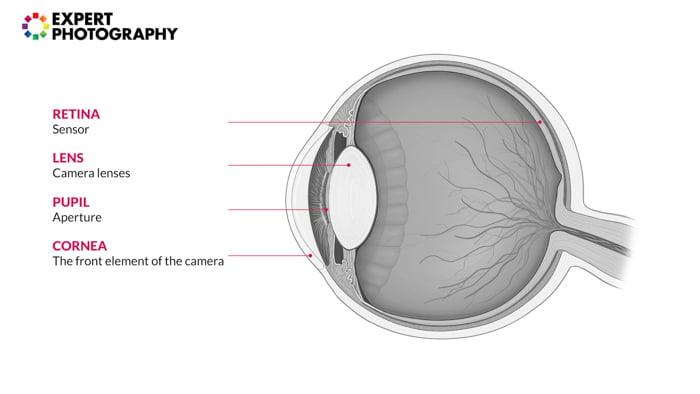

In our eyes, light first passes through the cornea. This is the front layer of the eye, like the front element of a camera lens. Both play an essential role in refracting the light and protecting the other parts of the eye or lens.

The iris is a ring-shaped membrane behind the cornea. It has an adjustable opening in its center: the pupil. This controls the amount of light passing through. In camera lenses, the aperture has the same function.

Behind the iris is the lens. It is a transparent crystalline structure that is flexible and changes shape for focusing. Camera lenses usually contain several glass elements instead. Their focus is changed by moving these elements closer to or farther from the camera’s sensor.

Inside the eye, there is a photosensitive layer called the retina. The retina receives and converts light into electrical signals. These signals are then transmitted by neurons. This way, through the optic nerve, the retina sends messages to the brain. The ‘retina’ of the camera is the sensor.

The image projected onto the retina or the sensor is inverted: it is flipped both upside down and left to right. Our brain interprets it the right way around.

What is the Resolution of the Human Eye?

The main difference between the retina and a sensor is that the former is curved, as it is part of the eyeball. It also contains more light-sensitive cells than a camera sensor has pixels. It has about 130 million of these cells, of which about 6 million are sensitive to color (the cones).

In a camera sensor, the pixel density is even. In the eye, there are more cells in the middle of the retina.

If we count each cell as one pixel, the resolution of our eye would be about 130MP. Because the eyeball moves quickly and constantly, the effective figure is often estimated at around 576MP. Not to mention that, unlike a camera’s megapixel count, this figure does not have to account for the resolving power of an interchangeable lens.
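The 576MP figure is usually reached with a back-of-the-envelope calculation rather than by counting cells. The Python sketch below reproduces one common version of it. The 120 degree square field that the moving eye is assumed to cover and the 0.3 arcminute per "pixel" acuity are assumptions that do not appear in this article, and the variable names are illustrative only.

```python
# Assumed inputs (not from the article): the moving eye covers a square
# field of 120 x 120 degrees and resolves detail about 0.3 arcminutes wide.
FIELD_DEG = 120.0
ARCMIN_PER_PIXEL = 0.3
ARCMIN_PER_DEGREE = 60.0

# Number of resolvable "pixels" along one side of the field.
pixels_per_side = FIELD_DEG * ARCMIN_PER_DEGREE / ARCMIN_PER_PIXEL

# Total count for the square field, expressed in megapixels.
megapixels = pixels_per_side ** 2 / 1e6

print(f"{pixels_per_side:.0f} pixels per side, about {megapixels:.0f} MP")
```

With these inputs the estimate comes out at 24,000 pixels per side, or 576 megapixels. Changing either assumption moves the result a lot, which is one reason to treat the number as a comparison rather than a measurement.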

We also have to mention that the cells most sensitive to light (the rods) are effectively switched off in bright conditions, where the cones do the work. The rods help our vision in low-light conditions, where the situation is precisely the opposite: only the rods are active. This is why we can barely see colors around twilight.

Also, with aging, our eyes lose some of these cells and our brains adapt to that. So a single resolution value does not describe an eye well, as vision depends on many other things.

So, given the high number of cells in the retina and the constant movement of the eye, we can say that the human eye is approximately 576MP. It does not mean the same as in photography, but it is an interesting comparison. It shows, in photographic terms, how capable our eyes are.

Understanding Human Field of View

We often hear that a 50mm lens on a full frame camera is the closest to the human field of view.

We call the 50mm a standard lens because its focal length is close to the diagonal of the full frame sensor (about 43mm). Our eyes’ focal length is approximately 22mm. So the 50mm is not called a standard lens because it has the same focal length or overall angle of view as the eye.

As we have two eyes, human vision covers a horizontal arc of approximately 210 degrees. This does not mean we can see sharply across all 210 degrees, as most of it is peripheral vision. We cannot have everything around us in focus. We can only detect movement and shapes near the edges. That is why we are constantly moving our eyes (saccadic eye movement).

On a full frame camera, a 50mm lens has an angle of view of about 46 degrees, measured across the diagonal. The center of our field of vision, around 40-60 degrees, is where we get most of the information. This means that our perception depends mostly on this part, and it is close to the 50mm angle of view.
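The angle of view quoted above can be checked with the standard formula for a rectilinear lens focused at infinity: angle = 2 × atan(d / 2f), where d is a sensor dimension and f is the focal length. The Python sketch below assumes a 36 × 24mm full frame sensor; the function and constant names are illustrative, not part of any camera API.

```python
import math


def angle_of_view_deg(sensor_dimension_mm: float, focal_length_mm: float) -> float:
    """Angle of view in degrees for a rectilinear lens focused at infinity."""
    return math.degrees(2 * math.atan(sensor_dimension_mm / (2 * focal_length_mm)))


# Assumed full frame sensor size (36 x 24 mm).
FULL_FRAME_WIDTH_MM = 36.0
FULL_FRAME_HEIGHT_MM = 24.0
full_frame_diagonal_mm = math.hypot(FULL_FRAME_WIDTH_MM, FULL_FRAME_HEIGHT_MM)

diagonal_aov = angle_of_view_deg(full_frame_diagonal_mm, 50.0)
horizontal_aov = angle_of_view_deg(FULL_FRAME_WIDTH_MM, 50.0)

print(f"Sensor diagonal: {full_frame_diagonal_mm:.1f} mm")
print(f"50mm diagonal angle of view: {diagonal_aov:.1f} degrees")
print(f"50mm horizontal angle of view: {horizontal_aov:.1f} degrees")
```

The diagonal result lands just under 47 degrees, in line with the figure usually quoted for a 50mm lens, while the horizontal angle is closer to 40 degrees. Both fall at the low end of the 40-60 degree central zone of our vision.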

What is the Dynamic Range of the Human Eye?

Dynamic range is an interesting topic when we compare cameras to our eyes. When looking at a scene, our eye behaves more like a video camera.

It is constantly adjusting to the lighting conditions. This means that we do not ‘expose’ only for the bright or only for the dark areas of the scene.

This is possible because of our rapid eye movements. Our eyes are always moving, allowing us to measure the light in all parts of the scene. This way, the pupil can adjust to the light conditions wherever we look.

This difference is visible when we are shooting a subject that is lit from behind. Our camera may capture only a silhouette, but our eyes will still see details in the darker areas.

What is the Eye’s ISO?

We cannot measure the sensitivity of a human organ in exactly the same way as that of film or a sensor. If we would like to compare the two, the ISO of the eye is estimated at around 1 in bright light and around 500-1000 in dark conditions.
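Photographers usually compare sensitivities in stops, where each stop is a doubling of ISO. As a rough illustration, the Python sketch below converts the estimates above into stops, taking them at face value. The function name is hypothetical and the numbers are only the article's approximate figures.

```python
import math


def stops_between(iso_low: float, iso_high: float) -> float:
    """Number of stops (doublings of sensitivity) between two ISO values."""
    return math.log2(iso_high / iso_low)


# Estimates from the text: about ISO 1 in bright light, 500-1000 in the dark.
BRIGHT_ISO = 1
for dark_iso in (500, 1000):
    stops = stops_between(BRIGHT_ISO, dark_iso)
    print(f"ISO {BRIGHT_ISO} to ISO {dark_iso}: about {stops:.1f} stops")
```

On these figures, the eye's sensitivity swings by roughly 9 to 10 stops between bright and dark conditions.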

Conclusion

It is clear why we would draw a parallel between our eyes and our cameras. But we have to admit that we cannot copy the exact mechanism of our vision.

Digital cameras cannot compete with the complexity of the eye and the brain. Do not forget that our vision depends on our brain. Even psychological factors affect our perception.
